For simplicity, this tutorial focuses only on intranet searching.

Intranet Configuration

(Source: Nutch Website)

To configure things for intranet crawling you must:

1) Create a directory with a flat file of root urls. For example, to crawl the Nutch site you might start with a file named urls/nutch containing the url of just the Nutch home page. All other Nutch pages should be reachable from this page. The urls/nutch file would thus contain:

http://lucene.apache.org/nutch/

Note: To start crawling from more than one url, add more files containing the urls to be crawled to the same directory.

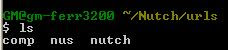

For example, in the urls folder, I have three flat files containing the url of SOC's homepage, NUS's homepage and Nutch's homepage respectively.
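As a minimal sketch of step 1, the seed directory can be set up from a shell. The file urls/nutch and its url come from the text above; the second file name and url (urls/example, http://www.example.com/) are hypothetical placeholders, not the sites I actually crawled.

```shell
# Create the directory that holds the flat files of root urls.
mkdir -p urls

# One seed file per site; each contains just that site's root url.
printf '%s\n' 'http://lucene.apache.org/nutch/' > urls/nutch

# Hypothetical second seed file (placeholder url, swap in your own site).
printf '%s\n' 'http://www.example.com/' > urls/example

# Show what the crawler will start from.
cat urls/nutch urls/example
```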

2) Edit the file conf/crawl-urlfilter.txt and replace MY.DOMAIN.NAME with the name of the domain you wish to crawl. For example, if you wished to limit the crawl to the apache.org domain, the line should read:

+^http://([a-z0-9]*\.)*apache.org/

This will include any url in the domain apache.org.
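To get a feel for which urls the apache.org rule admits, the pattern can be tried out with grep -E. This is only an approximation: Nutch evaluates the filter as a Java regular expression, and I am assuming POSIX extended syntax behaves the same way for this particular pattern. The leading + marks the line as an include rule and is not part of the regex. The function name url_included and the sample urls are made up for illustration.

```shell
# The regex part of the filter line (without the leading "+").
pattern='^http://([a-z0-9]*\.)*apache.org/'

# Succeeds (exit status 0) when the url matches the pattern.
url_included() {
  printf '%s\n' "$1" | grep -Eq "$pattern"
}

# Try a few sample urls against the rule.
for url in 'http://lucene.apache.org/nutch/' \
           'http://apache.org/' \
           'http://www.example.com/'; do
  if url_included "$url"; then
    echo "match:    $url"
  else
    echo "no match: $url"
  fi
done
```

The first two urls should be reported as matches and the third as no match.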

3) Edit the file conf/nutch-site.xml, insert at minimum the required properties into it, and fill in proper values for them.

For example, in the nutch-site.xml file you will need to enter your own information between the opening value tag and the closing /value tag of each property.

The template for the XML file is located in the Nutch Tutorial.

Sidenote: I am using an image for the XML because I can't seem to get blogspot to publish the text without removing the tags. Does anyone know how?
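As a rough text version, a minimal nutch-site.xml could be written out from the shell as sketched here. The four http.agent.* property names are what I recall from the Nutch tutorial of that era, so treat them as an assumption and check them against the template in the tutorial. All values are placeholders. The sketch writes to a scratch file, conf/nutch-site.xml.sample (a made-up name), so that it does not overwrite a real config.

```shell
# Write a sample config next to the real one (the file name is hypothetical).
mkdir -p conf
cat > conf/nutch-site.xml.sample <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Property names assumed from the Nutch tutorial; values are placeholders. -->
  <property>
    <name>http.agent.name</name>
    <value>MY_CRAWLER_NAME</value>
  </property>
  <property>
    <name>http.agent.description</name>
    <value>MY_CRAWLER_DESCRIPTION</value>
  </property>
  <property>
    <name>http.agent.url</name>
    <value>MY_HOMEPAGE_URL</value>
  </property>
  <property>
    <name>http.agent.email</name>
    <value>MY_EMAIL_ADDRESS</value>
  </property>
</configuration>
EOF

# Review the result before copying it over conf/nutch-site.xml.
cat conf/nutch-site.xml.sample
```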

Intranet Crawling

(Source: Nutch Website)

Once things are configured, running the crawl is easy. Just use the crawl command. Its options include:

- -dir dir names the directory to put the crawl in.

- -threads threads determines the number of threads that will fetch in parallel.

- -depth depth indicates the link depth from the root page that should be crawled.

- -topN N determines the maximum number of pages that will be retrieved at each level up to the depth.

For example, a typical call might be:

bin/nutch crawl urls -dir crawl -depth 3 -topN 50

Typically one starts testing one's configuration by crawling at shallow depths, sharply limiting the number of pages fetched at each level (-topN), and watching the output to check that desired pages are fetched and undesirable pages are not. Once one is confident of the configuration, then an appropriate depth for a full crawl is around 10. The number of pages per level (-topN) for a full crawl can be from tens of thousands to millions, depending on your resources.
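The test-first workflow described here can be sketched as a small shell helper. Only the bin/nutch crawl options come from the text; the function name crawl_cmd, the DRY_RUN switch and the crawl-test directory name are my own inventions, and the full-crawl numbers (depth 10, topN 50000) are just one pick from the ranges quoted above. By default the helper only prints the command, so it is safe to run without Nutch installed.

```shell
# Print (DRY_RUN=1, the default) or run (DRY_RUN=0) a crawl command.
# Arguments: output directory, depth, topN. The seed directory is "urls".
crawl_cmd() {
  dir=$1
  depth=$2
  topn=$3
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "bin/nutch crawl urls -dir $dir -depth $depth -topN $topn"
  else
    bin/nutch crawl urls -dir "$dir" -depth "$depth" -topN "$topn"
  fi
}

# Shallow test crawl first: few levels, few pages per level.
crawl_cmd crawl-test 3 50

# Full crawl once the fetched pages look right.
crawl_cmd crawl 10 50000
```

Run it with DRY_RUN=0 from the Nutch directory to actually start the crawls.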

Note: When searching later, the webapp will look for a folder named crawl relative to where Tomcat is started, unless the searcher directory is set (this will be covered later). So for simplicity, you can just write the output of the crawl to a folder named crawl.
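A quick sanity check before starting Tomcat might look like the sketch below. The function name has_crawl_dir is hypothetical, and the check only confirms that a folder named crawl exists in the given directory (the current one by default); it does not inspect what the crawl wrote into it.

```shell
# Succeeds when a "crawl" folder exists under the given directory
# (defaults to the current directory).
has_crawl_dir() {
  [ -d "${1:-.}/crawl" ]
}

if has_crawl_dir; then
  echo "crawl folder found in $(pwd)"
else
  echo "no crawl folder in $(pwd); run the crawl here or set the searcher directory"
fi
```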